Planning with Large Language Models for Code Generation

We were joined by the authors Shun Zhang and Zhenfang Chen today.

We first highlighted some questions related to the paper:

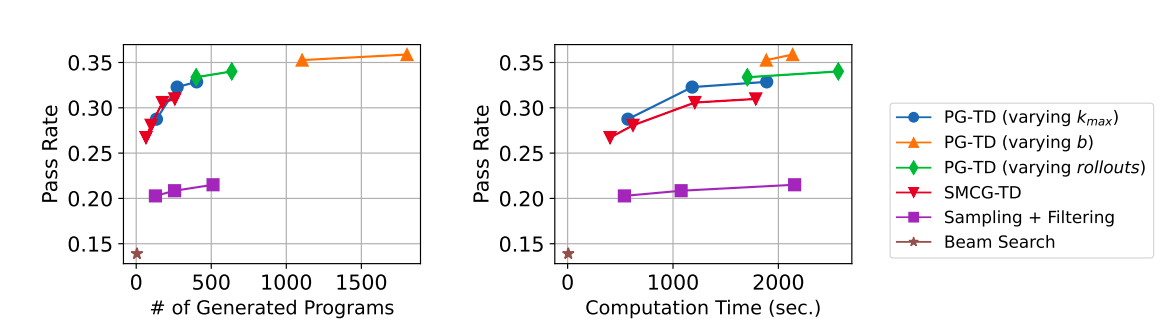

- Beam search vs. MCTS: see results in the graph below. Intuitively, beam search is much faster, but MCTS weighs long-term results more heavily because it performs full rollouts.

- Maybe you can combine sampling with beam search? For example, sample first, and then run beam search.

- The MCTS sampling approach is a bit difficult to parallelize.

- With more computation time, sampling + filtering reaches higher pass rates (the gain is roughly linear in computation time).

- Computation time plot:
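
To make the beam search vs. MCTS trade-off concrete, here is a toy sketch. It is not the paper's algorithm: beam search ranks partial programs by model log-probability only, while `rollout_lookahead`, a much simplified stand-in for MCTS, scores each candidate next token by greedily completing the program and computing its reward. The toy model `next_token_probs`, the target `TARGET`, and the position-matching `pass_rate` are all hypothetical.

```python
import math

LENGTH = 2     # toy programs are exactly two tokens long
TARGET = "ab"  # hypothetical "correct" program


def next_token_probs(prefix):
    """Hypothetical toy LM: it locally prefers 'x', but no program starting with 'x' is correct."""
    if prefix == "":
        return {"x": 0.6, "a": 0.4}
    if prefix.startswith("x"):
        return {"y": 0.9, "b": 0.1}
    return {"b": 0.6, "y": 0.4}


def pass_rate(program):
    """Toy reward standing in for test cases: fraction of positions that match TARGET."""
    return sum(p == t for p, t in zip(program, TARGET)) / len(TARGET)


def beam_search(width):
    """Keep the `width` most likely prefixes at each step; return the most likely full program."""
    beams = [("", 0.0)]  # (prefix, log-probability)
    for _ in range(LENGTH):
        candidates = [
            (prefix + tok, logp + math.log(p))
            for prefix, logp in beams
            for tok, p in next_token_probs(prefix).items()
        ]
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:width]
    return beams[0][0]


def greedy_rollout(prefix):
    """Complete a prefix by always taking the most likely next token."""
    while len(prefix) < LENGTH:
        probs = next_token_probs(prefix)
        prefix += max(probs, key=probs.get)
    return prefix


def rollout_lookahead():
    """Choose each token by the reward of a full greedy rollout that starts with it."""
    prefix = ""
    while len(prefix) < LENGTH:
        options = next_token_probs(prefix)
        prefix += max(options, key=lambda tok: pass_rate(greedy_rollout(prefix + tok)))
    return prefix


print(beam_search(width=2), pass_rate(beam_search(width=2)))  # xy 0.0
print(rollout_lookahead(), pass_rate(rollout_lookahead()))    # ab 1.0
```

Beam search commits to the locally likely `x` and never recovers, while evaluating full rollouts finds the program that actually scores well. The price is extra model calls per step, which is where the speed gap comes from on realistic problem sizes.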

Ideas around test cases:

- Test cases are generated from the natural language description alone

- Would it be helpful to put more test cases in the prompt?

- Is it easier to generate test cases than to generate code?

- Maybe we could train another network to adversarially generate test cases?

- How about using more assert statements? The authors noted that the model then doesn't write more code; it just generates more test cases.

- Relevant work: CodeT (https://arxiv.org/abs/2207.10397), AlphaCode
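
The following is a simplified sketch in the spirit of CodeT's dual execution agreement, written from memory rather than from its code: programs that pass exactly the same set of generated tests are grouped, and each group is scored by (number of programs) × (number of tests passed). The candidate functions and the generated tests are hypothetical; the last test is deliberately wrong to mimic a faulty model-generated test.

```python
from collections import defaultdict


def passed_tests(program, tests):
    """Indices of the (args, expected) tests that `program` passes; exceptions count as failures."""
    passed = set()
    for i, (args, expected) in enumerate(tests):
        try:
            if program(*args) == expected:
                passed.add(i)
        except Exception:
            pass
    return frozenset(passed)


def dual_agreement_rank(programs, tests):
    """Group programs passing the same tests; score = (#programs) * (#tests passed); best first."""
    groups = defaultdict(list)
    for program in programs:
        groups[passed_tests(program, tests)].append(program)
    ranked = sorted(groups.items(), key=lambda kv: len(kv[1]) * len(kv[0]), reverse=True)
    return [program for _, members in ranked for program in members]


# Hypothetical candidates for "return the absolute value of x".
def abs_builtin(x):
    return abs(x)


def abs_branch(x):
    return x if x >= 0 else -x


def identity(x):
    return x  # wrong, but it agrees with the faulty test below


# Hypothetical model-generated tests; the last one is wrong.
tests = [((3,), 3), ((-2,), 2), ((0,), 0), ((-5,), -5)]
ranking = dual_agreement_rank([abs_builtin, abs_branch, identity], tests)
print([f.__name__ for f in ranking])
```

Here every candidate passes 3 of 4 tests, so plain pass rate cannot separate them; agreement between independently sampled programs breaks the tie in favor of the two correct ones.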

Ideas around human feedback:

- The premise: given two options (pi * radius * radius vs. 3.14 * radius * radius), which one would a human prefer?

- A lot of feedback may be needed.

- How do we best use human feedback?

- People may have their own preferences (e.g., coding style).

- Passing test cases may not be what humans actually want from the code.

- You can also use human feedback on top of the existing model
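
One standard way to turn pairwise choices like `pi * radius * radius` vs. `3.14 * radius * radius` into a reward is a Bradley–Terry preference model, sketched below. This was not part of the paper; the two hand-crafted features, the learning rate, and the three preference labels are hypothetical. A real system would more likely learn a neural reward model over the code itself.

```python
import math


def features(code):
    """Hypothetical hand-crafted features: named constant vs. magic number."""
    tokens = code.split()
    return [
        1.0 if "pi" in tokens else 0.0,    # uses a named constant
        1.0 if "3.14" in tokens else 0.0,  # uses a magic number
    ]


def fit_bradley_terry(comparisons, lr=0.5, epochs=100):
    """Fit w so that P(preferred beats other) = sigmoid(w . (f(preferred) - f(other)))."""
    w = [0.0, 0.0]
    for _ in range(epochs):
        for preferred, other in comparisons:
            diff = [a - b for a, b in zip(features(preferred), features(other))]
            margin = sum(wi * di for wi, di in zip(w, diff))
            p = 1.0 / (1.0 + math.exp(-margin))
            # Gradient ascent: d/dw log sigmoid(margin) = (1 - p) * diff.
            w = [wi + lr * (1.0 - p) * di for wi, di in zip(w, diff)]
    return w


def preference_score(code, w):
    """Learned preference score; one option is to add it to the pass-rate reward."""
    return sum(wi * fi for wi, fi in zip(w, features(code)))


# Hypothetical labels: a human picked the named constant three times.
named, magic = "pi * radius * radius", "3.14 * radius * radius"
w = fit_bradley_terry([(named, magic)] * 3)
print(preference_score(named, w) > preference_score(magic, w))  # True
```

Because the learned score is just a number per program, it could sit on top of the existing model as an extra reward term or a reranker, which is one reading of "human feedback on top of the existing model".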

Ideas around reward function design:

- The authors experimented with rewards that minimize code length and rewards that maximize the number of comments in the code.

- Does a higher pass rate really mean we're doing program synthesis? Is it really better to pass more test cases if the problem still isn't fully solved?

- Would pass@k work better than pass rate as the reward? Because pass@k is a sparser signal, the authors chose pass rate instead.

- Could we integrate the stack trace in the reward? Would it help?
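
The sketch below contrasts the dense pass-rate reward with a binary "all tests pass" reward (the per-program signal underlying pass@k), and shows one hypothetical way to fold in code length and comment count. The `solve` naming convention, the weights, and the example programs are assumptions for illustration. It uses `exec` with no sandbox; real evaluation harnesses run untrusted candidates in isolated processes with timeouts.

```python
def load(source):
    """Execute candidate source and return its `solve` function (hypothetical convention)."""
    namespace = {}
    exec(source, namespace)
    return namespace["solve"]


def pass_rate(source, tests):
    """Dense reward: fraction of (args, expected) tests passed; crashes count as failures."""
    try:
        solve = load(source)
    except Exception:
        return 0.0
    passed = 0
    for args, expected in tests:
        try:
            passed += solve(*args) == expected
        except Exception:
            pass
    return passed / len(tests)


def strict_accuracy(source, tests):
    """Sparse reward: 1.0 only if every test passes."""
    return 1.0 if pass_rate(source, tests) == 1.0 else 0.0


def shaped_reward(source, tests, length_weight=0.0, comment_weight=0.0):
    """Pass rate minus a per-code-line penalty plus a per-comment-line bonus (weights hypothetical)."""
    lines = [ln.strip() for ln in source.splitlines() if ln.strip()]
    n_comments = sum(ln.startswith("#") for ln in lines)
    n_code = len(lines) - n_comments
    return pass_rate(source, tests) - length_weight * n_code + comment_weight * n_comments


tests = [((2,), 4), ((3,), 9), ((-1,), 1)]
partial = "def solve(x):\n    return x + x\n"  # right only for x = 2
correct = "def solve(x):\n    # square the input\n    return x * x\n"

print(pass_rate(partial, tests), strict_accuracy(partial, tests))
print(pass_rate(correct, tests), strict_accuracy(correct, tests))
print(shaped_reward(correct, tests, length_weight=0.01, comment_weight=0.05))
```

The partial program earns a pass rate of 1/3 but a strict reward of 0, which illustrates both sides of the discussion: the dense signal gives the search something to climb, but it also rewards programs that do not solve the problem.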

Could we use this framework without prefixes?

- Infilling?